Food for thought

New EU Directive on Misleading Green Claims

The European Council has adopted a new directive nicknamed the “Greenwashing directive” to protect consumers from misleading sustainability claims and other unfair commercial p

Preparing your CSRD report

Preparing for CSRD? Join the club of over 50,000 companies required to report on sustainability! Talking to companies preparing for CSRD, we have observed that their biggest concer

FAO 1.5°C Roadmap: The net-zero plan for food

Discover the FAO 1.5 °C roadmap for transforming agri-food systems and achieving sustainable goals. Explore sector-specific solutions across domains like livestock, healthy diets,

The net-zero transformation is data-driven

Tackling climate change is complex and change leaders often remark that there is no silver bullet. At CarbonCloud we not only agree with this point; we actively engage with it. But

ESG Software Guide: The best tech stack for every company

Discover the top ESG reporting solutions in our expert guide! Find out how to choose the right ESG provider for your sustainability strategy. What Is An ESG Software? Who Needs ESG

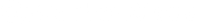

Climate Action in the Food Industry: Half-Baked Efforts as 44% of Companies Remain at Initial Maturity Level

An in-depth analysis of 83 global companies in the food system reveals mixed levels of maturity along 6 dimensions of climate performance, but with a projected positive trajectory

ESG Reporting: Navigating ESG frameworks & requirements

Learn all about ESG reporting standards to promote transparency, impress your stakeholders, and boost your brand. What Does ESG Reporting Mean? Why Is ESG Reporting Important For C

Food industry & Scope 3: A state of the art of food supply chain emissions

Scope 3 emissions are taking (rightfully and finally) center stage and the food industry is talking the talk. Since 2016, Scope 3 disclosures from food companies in CDP have increa

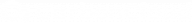

How to set SBTi FLAG targets – The Food Edition

The Forest Land and Agriculture Guidance from SBTi had retailers and food producers on the edge of their seats until its release in September 2022. Since April 2023 FLAG targets ar

GUIDES & EBOOKS

Your climate strategy curriculum

Immerse yourself in climate knowledge from our guides and ebooks and emerge an operational guru

Clients

Triumf Glass boosts its sustainability work through collaboration with CarbonCloud

Triumf Glass and CarbonCloud partner up towards a more sustainable future. Through CarbonCloud’s research-based software service, Triumf Glass boosts the transparency of its

Moma Foods Partners with CarbonCloud to Drive Sustainable Impact

We’re pleased to announce our partnership with UK-based oat drink company MOMA Foods. This collaboration marks a significant step towards achieving MOMA’s mission of

Bidfood and CarbonCloud Join Forces to Initiate Transformation in Supply Chain Carbon Management

Bidfood, one of the UK’s leading foodservice providers, has announced a strategic collaboration with CarbonCloud, a pioneer in climate intelligence technology. This partnership m

Menigo: How to manage Scope 3 emissions and engage suppliers

Menigo, the Sysco subsidiary in the Nordics, established itself as a Scope 3 emissions pioneer, moving forward from what the majority of wholesalers, distributors, and grocers are

Uhrenholt applies CarbonCloud Machine Learning to all its food products

Global food leader, Uhrenholt, kickstarts data-driven carbon management along its supply chain with CarbonCloud’s carbon footprint calculations for all its products. With this in

PlanetDairy makes great-tasting hybrid cheese with a 40% reduced climate footprint

“But cheese though”, many sigh in despair. PlanetDairy is here to remove the but and the despair, granting us Audu, a cheese experience minus 40% of the emissions. Amen. Al

CarbonCloud partners with food service challenger Out of Home

CarbonCloud is the climate intelligence platform specializing in climate footprint calculations of food products. The company is now working together with Out of Home to deliver th

Nordic Seafarm: A jack of all trades ingredient, an ace in climate performance

What’s your first thought when you think of seaweed? And no, it can’t be maki rolls! Nordic Seafarm is here to make Nordic sugar kelp the top-of-mind ingredient. Multifaceted,

Cérélia: High experience standards, one recipe switch, and a 30% reduced climate footprint

Cérélia is a global leader in fresh dough and ready-to-eat pancakes headquartered in France but expanding globally, in both production and distribution. Cérélia has 12 factorie

Science

Why is comparability important in carbon footprints?

Comparing different climate footprints may seem nitty-gritty, sustainability navel-gazing. In actuality, the comparability of climate footprints is central to what humanity can do

What are Scope 1, 2, 3 emissions?

The terms Scope 1, 2, and 3 emissions are casually thrown around in the sustainability space – a bit too casually if you ask us. If you didn’t Google it the first time you heard

ESG Standards Explained: Corporate Sustainability Reporting Directive (CSRD)

The European Union’s Corporate Sustainability Reporting Directive (CSRD) is mobilizing ESG reporting for the food and beverage market. But there is another good reason to look at

Sustainability Standards Explained: TCFD

The Task Force on Climate-Related Financial Disclosures (TCFD) Recommendations is the shadow ruler of ESG reporting. While the name “TCFD” may not ring a bell to everyone, the

Sustainability Standards Explained: SBTi

SBTi is the Pete Davidson of Sustainability Standards: rapidly and consistently up-and-coming, charming and approachable, a must-have for aspirational influencers, and fresh in the

Sustainability Standards Explained: ISO 14000 family

Nothing says Standards like the International Organization for Standardization, also known to the business community as ISO. ISO is a popular and globally credible provider of stan

Sustainability Standards Explained: GHG protocol

Food and beverage producers, retailers, you and we at CarbonCloud have the same headache: There is currently no universally agreed-upon standard for sustainability. There isn’t a

How do deforestation emissions work in food?

Deforestation is one of those topics that, as actionable and measurable as it is, transcends business and numbers. For most of us, images of thinning forests, the faded shades of g

We saw 0kg of CO2e: The case of Two Raccoons, fruit surplus, and allocating emissions

Two Raccoons are winemakers with a purpose… or rather, winemakers who give a purpose. Two Raccoons makes delicious wines from good fruit surplus which otherwise would go to waste

Newsletter to-go?

Our special today is our Newsletter, including snackable tips, hearty climate knowledge, and digestible industry news delivered to your inbox.

Inside CarbonCloud

CarbonCloud Product Updates – February 2024

It’s Oscars season – Might we nominate CarbonCloud for this month’s shift to climate footprints that are so high-definition they break new ground? Climate Footprints in High

CarbonCloud Product Updates – January 2024

It’s 2024, the year ESG reporting for food and beverage companies enters its sophisticated era. CarbonCloud ushers this with new ESG reporting features and data aggregations. Thi

Former Amazon Head appointed Chairman of green tech startup CarbonCloud

Olaf Schmitz, seasoned entrepreneur and former Head of business development for Amazon Europe, joins the Swedish climate intelligence platform. CarbonCloud is the food industry’s

Comparing apples & oranges: Climate footprints of 10,000 food products revealed

ClimateHub, the free-access carbon footprint database by CarbonCloud, digitally releases the climate footprint of 10,000 branded food and beverage products at the American grocery

CarbonCloud raises €7.5 million in Series A to become the food industry’s leading climate intelligence platform

CarbonCloud, the climate-tech SaaS solution for the food industry, secured €7.5 million to expand its market position as the leading climate intelligence platform. The financing

Under the hood of Automated Modeling: It’s not magic, it’s just appropriate

At CarbonCloud, our goal is to solve the climate crisis by highlighting data-driven, impactful emissions reduction decisions for the food and beverage industry. Automated Modelin

Explorer–Navigator–Trailblazer: Product packages with a story

You are casually browsing through the CarbonCloud website, you find some interesting stuff and you decide to check the Product packages page. Or, you are already talking to one of

Automated Modeling or How the food industry stopped the LCA and loved machine learning

Here are the facts: In 1969, Life Cycle Assessment was born, measuring the climate impact of Coca-Cola packaging. 53 years later, little has changed: The food industry is sti

The CarbonCloud Third-Party Verification

Third-party verification is an important confidence boost in your climate footprints and strategy. So no wonder we get questions about it! Our clients –and their clients– are i

CLIMATE MATURITY ASSESSMENT

How net-zero ready are you?

Take CarbonCloud's 3-minute Maturity Assessment quiz to find your starting point and get tailored tips and action points on how to step up climate work throughout your organization.